Time Series Forecasting: Temporal Convolutional Networks vs. AutoML’s XGBoost Regression

So, temporal convolutional networks (TCNs) are now being compared against canonical recurrent neural networks (RNNs) such as LSTMs and GRUs to see which work better, and it seems that more and more people are siding with TCNs over RNNs.

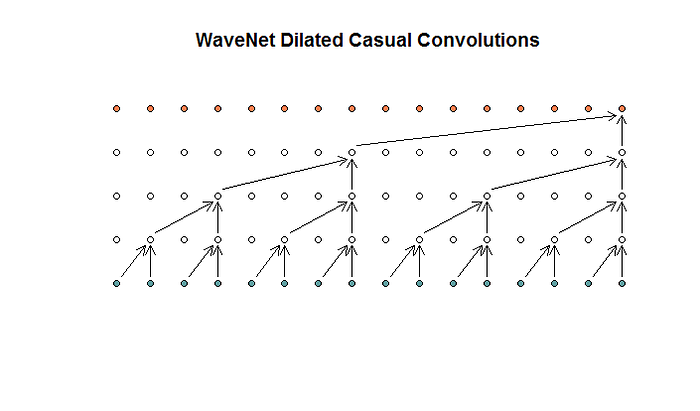

Philippe Remy has created a sweet and simple TCN package called keras-tcn that makes building TCNs with Keras/TensorFlow a breeze. Choose the number of filters, the number of residual stacks, and the activation (or just use the default settings), then add another layer for your task.

In my case, I was experimenting with one-step-ahead time series forecasting based on a lag of 20: I am trying to predict one value from the previous 20 values, so it’s a kind of autoregressive model. To the deep learning crowd, it might be thought of as a seq2seq model with input sequences of 20 values and output sequences of 1 value. The dilated convolutional layers enlarge the receptive field so that, without much extra cost, it can cover the entire input sequence. Bottom line, it’s supposed to be as good as or better than RNNs at a fraction of the computational cost.
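To make the lag-of-20 framing concrete, here is a minimal NumPy sketch (not keras-tcn’s own code) that turns a series into 20-value input windows with next-step targets, plus a helper that estimates how many past time steps a stack of dilated causal convolutions can see. The function names, kernel size, and dilation list are illustrative assumptions, and the two-convolutions-per-residual-block default reflects the common TCN block design; check your keras-tcn version’s documentation for its actual defaults.

```python
import numpy as np


def make_lag_windows(series, lag=20):
    """Frame a 1-D series as supervised pairs for one-step-ahead forecasting.

    Each row of X holds `lag` consecutive values; y is the value that follows.
    """
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - lag
    if n_samples <= 0:
        raise ValueError("series must be longer than lag")
    # Row i covers series[i : i + lag]; its target is series[i + lag].
    X = np.stack([series[i:i + lag] for i in range(n_samples)])
    y = series[lag:]
    return X, y


def tcn_receptive_field(kernel_size, dilations, nb_stacks=1, convs_per_block=2):
    """Receptive field (in time steps) of stacked dilated causal convolutions.

    A causal conv with dilation d extends the field by (kernel_size - 1) * d.
    Assumes `convs_per_block` conv layers share each dilation in a residual
    block and the whole dilation list is repeated `nb_stacks` times.
    """
    growth = (kernel_size - 1) * sum(dilations)
    return 1 + convs_per_block * nb_stacks * growth


X, y = make_lag_windows(np.arange(30), lag=20)
print(X.shape, y.shape)  # (10, 20) (10,)
print(tcn_receptive_field(kernel_size=2, dilations=(1, 2, 4, 8)))  # 31
```

With a kernel size of 2 and dilations (1, 2, 4, 8), the sketch gives a receptive field of 31 steps, enough to cover a 20-value window. Keras sequence layers, including keras-tcn’s `TCN`, expect input shaped (samples, timesteps, features), so `X` would typically be reshaped with `X[..., np.newaxis]` before training.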

Datasets: ECG

One of the datasets I worked with is a 10,000-point time series of ECG data from the MIT-BIH Normal Sinus Rhythm Database, whose subjects had no significant arrhythmias. I used a sigmoid-based attention mechanism at the input, followed by a TCN from keras-tcn, and compared its predictions against those of a model from the autoML package, set to use XGBoost.
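The post doesn’t spell out the attention layer, so the following is only a guess at the general idea: a minimal NumPy sketch of sigmoid feature gating, where a linear projection followed by a sigmoid produces a weight between 0 and 1 for every input value, and the TCN then sees the re-weighted input. The function names and the shapes of `W` and `b` are hypothetical. In Keras, the same pattern is commonly written as a `Dense` layer with `activation="sigmoid"` whose output is multiplied element-wise with its input.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_feature_attention(x, W, b):
    """Re-weight each input value by a sigmoid gate computed from the whole input.

    x: (batch, n_inputs), e.g. the 20 lagged values of each window
    W: (n_inputs, n_inputs) and b: (n_inputs,), learned in a real model
    Returns the gated input and the gate weights, both shaped like x.
    """
    scores = x @ W + b          # one score per input position
    weights = sigmoid(scores)   # independent gates in (0, 1), not a softmax
    return x * weights, weights


# Hypothetical usage with random (untrained) parameters on 4 windows of 20 lags.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 20))
W = rng.normal(scale=0.1, size=(20, 20))
b = np.zeros(20)
gated, weights = sigmoid_feature_attention(x, W, b)
tcn_input = gated[..., np.newaxis]  # (4, 20, 1): 20 timesteps, one feature
print(tcn_input.shape)  # (4, 20, 1)
```

Unlike softmax attention, sigmoid gates don’t compete with each other: each weight is squashed independently, so the layer can keep or suppress several lags at once.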

I trained on ~9k of the points and used the remaining ~1k for out-of-sample forecasting. The R² (coefficient of determination) and the explained variance score were both around 92%, while the Kling-Gupta efficiency was above 93%. The Willmott index of agreement came out to almost 98% (1 being perfect agreement), so the model seems pretty decent.
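The four scores used throughout this comparison can be computed with plain NumPy. This is a minimal sketch, not the code from the Colab notebook: the function names are my own, `obs` is the held-out series and `sim` the forecast, and the Kling-Gupta efficiency follows the original formulation with alpha as a ratio of standard deviations (a later modified version uses coefficients of variation instead). For 1-D inputs, the first two should agree with scikit-learn’s `r2_score` and `explained_variance_score`.

```python
import numpy as np


def r2_score(obs, sim):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    ss_res = np.sum((obs - sim) ** 2)
    ss_tot = np.sum((obs - obs.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def explained_variance(obs, sim):
    """1 - Var(obs - sim) / Var(obs); unlike R^2, ignores a constant bias."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    return 1.0 - np.var(obs - sim) / np.var(obs)


def kling_gupta_efficiency(obs, sim):
    """KGE = 1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2).

    r: Pearson correlation, alpha: std(sim) / std(obs), beta: mean(sim) / mean(obs).
    """
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    r = np.corrcoef(obs, sim)[0, 1]
    alpha = sim.std() / obs.std()
    beta = sim.mean() / obs.mean()
    return 1.0 - np.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)


def willmott_index(obs, sim):
    """Willmott's index of agreement d, from 0 (none) to 1 (perfect)."""
    obs, sim = np.asarray(obs, float), np.asarray(sim, float)
    o_bar = obs.mean()
    num = np.sum((sim - obs) ** 2)
    den = np.sum((np.abs(sim - o_bar) + np.abs(obs - o_bar)) ** 2)
    return 1.0 - num / den


# Toy check: a forecast that is perfect except for a constant +1 offset.
obs = np.array([1.0, 2.0, 3.0, 4.0])
sim = obs + 1.0
for name, fn in [("R2", r2_score), ("EVS", explained_variance),
                 ("KGE", kling_gupta_efficiency), ("Willmott d", willmott_index)]:
    print(name, round(float(fn(obs, sim)), 3))
# prints: R2 0.2, EVS 1.0, KGE 0.6, Willmott d 0.84 (one per line)
```

The toy example shows why R² and explained variance can diverge: explained variance ignores a constant offset, and with these definitions the gap between the two equals the squared mean error divided by the variance of the observations. If the scores were computed this way, a gap like the sunspot TCN’s 82% vs. 87% is consistent with a systematic bias in its forecasts.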

In contrast, XGBoost came in at about 90% for both R² and explained variance. Its Kling-Gupta efficiency was almost 88%, and its Willmott index of agreement was over 97%. To be more precise, the Willmott index was 0.978 for the TCN and 0.972 for the XGBoost model. So the TCN does outperform XGBoost here, though not by what I would consider an earth-shattering margin.

Datasets: Group Sunspot Number

This one proved to be a win for XGBoost over the TCN. Again, this was a ~10,000-point time series, here of the group sunspot number. Using the TCN with an attention mechanism at the input (sigmoid nonlinearities to weight the input features), the R² and explained variance scores were about 82% and 87%, respectively. The Kling-Gupta efficiency was about 87%, and the Willmott index of agreement was about 95%. The XGBoost model from autoML, however, did quite well, with R² and explained variance scores of ~88%, a Kling-Gupta efficiency of 93%, and a Willmott index of about 97%. Looking only at the Willmott index of agreement, there wasn’t a huge difference, but the gaps in R² and in Kling-Gupta efficiency between the two models were fairly large.

Here’s a Google Colab notebook with some of the calculations as well as the specifics of the models. The AutoML side involved no hyperparameter tuning; this was straight out-of-the-box performance.